Flash memory

by Chris Woodford. Last updated: November 14, 2022.

Imagine if your memory worked only while you were awake. Every morning when you got up, your mind would be completely blank! You'd have to relearn everything you ever knew before you could do anything. It sounds like a nightmare, but it's exactly the problem computers have. Ordinary computer chips "forget" everything (lose their entire contents) when the power is switched off. Large personal computers get around this by having powerful magnetic memories called hard drives, which can remember things whether the power is on or off. But smaller, more portable devices, such as digital cameras and MP3 players, need smaller and more portable memories. They use special chips called flash memories to store information permanently. Flash memories are clever—but rather complex too. How exactly do they work?

Photo: Turn a digital camera's flash memory card over and you can see the electrical contacts that let the camera connect to the memory chip inside the protective plastic case.

How computers store information

Computers are electronic machines that process information in digital format. Instead of understanding words and numbers, as people do, they change those words and numbers into strings of zeros and ones called binary (sometimes referred to as "binary code"). Inside a computer, a single letter "A" is stored as eight binary digits: 01000001. In fact, all the basic characters on your keyboard (the letters A–Z in upper and lower case, the numbers 0–9, and the symbols) can be represented with different combinations of just eight binary digits. A question mark (?) is stored as 00111111, a number 7 as 00110111, and a left bracket ([) as 01011011. Virtually all computers know how to represent information with this "code," because it's an agreed, worldwide standard. It's called ASCII (American Standard Code for Information Interchange).
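If you'd like to check those patterns yourself, here is a minimal Python sketch. The function names `char_to_bits` and `bits_to_char` are made up for this illustration; the only real machinery is Python's built-in `ord`, `chr`, `format`, and `int`.

```python
def char_to_bits(ch: str) -> str:
    """Return the eight-digit binary pattern for one ASCII character."""
    code = ord(ch)  # the character's numeric code, e.g. 65 for "A"
    if code > 127:
        raise ValueError(f"{ch!r} is not an ASCII character")
    return format(code, "08b")  # pad with leading zeros to eight digits


def bits_to_char(bits: str) -> str:
    """Turn an eight-digit binary pattern back into its character."""
    return chr(int(bits, 2))


for ch in "A?7[":
    print(ch, char_to_bits(ch))
# A 01000001
# ? 00111111
# 7 00110111
# [ 01011011
```

Running the conversion in both directions (`bits_to_char(char_to_bits("A"))`) gets you back to the character you started with, which is the whole point of an agreed code.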

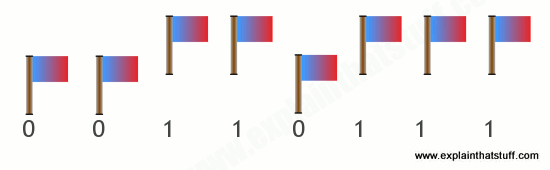

Computers can represent information with patterns of zeros and ones, but how exactly is the information stored inside their memory chips? It helps to think of a slightly different example. Suppose you're standing some distance away, I want to send a message to you, and I have only eight flags with which to do it. I can set the flags up in a line and then send each letter of the message to you by raising and lowering a different pattern of flags. If we both understand the ASCII code, sending information is easy. If I raise a flag, you can assume I mean a number 1, and if I leave a flag down, you can assume I mean a number 0. So suppose I show you this pattern, reading from left to right: down, down, up, up, down, up, up, up.

You can figure out that I am sending you the binary number 00110111, equivalent to the decimal number 55, and so signaling the character "7" in ASCII.
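The flag-reading step can be sketched in a few lines of Python. This is only an illustration: `flags_to_char` and the `UP`/`DOWN` names are invented here, and the flags are assumed to be read from left to right, with the leftmost flag as the most significant digit.

```python
UP, DOWN = True, False  # a raised flag means 1, a lowered flag means 0


def flags_to_char(flags):
    """Decode eight flags (leftmost first) into a number and an ASCII character."""
    if len(flags) != 8:
        raise ValueError("expected exactly eight flags")
    value = 0
    for flag in flags:
        # Shift what we have so far one binary place left, then add this flag.
        value = value * 2 + (1 if flag else 0)
    return value, chr(value)


pattern = [DOWN, DOWN, UP, UP, DOWN, UP, UP, UP]  # 00110111
print(flags_to_char(pattern))  # (55, '7')
```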

What does this have to do with memory? It shows that you can store, or represent, a character like "7" with something like a flag that can be in two places, either up or down. A computer memory is effectively a giant box of billions and billions of flags, each of which can be either up or down. They're not really flags, though—they are microscopic switches called transistors that can be either on or off. It takes eight switches to store a character like A, 7, or [. It takes one transistor to store each binary digit (which is called a bit). In most computers, eight of these bits are collectively called a byte. So when you hear people say a computer has so many megabytes of memory, it means it can store roughly that many million characters of information (mega means million; giga means thousand million or billion).
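To get a feel for the numbers, here is a rough back-of-envelope sketch. It assumes the simplified picture used above (one switch per bit, eight bits per byte) and decimal prefixes (mega = one million); `switches_needed` is a made-up helper name.

```python
BITS_PER_BYTE = 8
PREFIXES = {"kilo": 10**3, "mega": 10**6, "giga": 10**9}


def switches_needed(amount: int, prefix: str) -> int:
    """Number of on/off switches needed, assuming one switch stores one bit."""
    return amount * PREFIXES[prefix] * BITS_PER_BYTE


print(f"{switches_needed(32, 'mega'):,}")  # 256,000,000
print(f"{switches_needed(32, 'giga'):,}")  # 256,000,000,000
```

So even a small 32-megabyte memory needs hundreds of millions of switches under this simple model.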

What is flash memory?

Photo: A typical USB memory stick—and the flash memory chip you'll find inside if you take it apart (the large black rectangle on the right).

Ordinary transistors are electronic switches turned on or off by electricity—and that's both their strength and their weakness. It's a strength, because it means a computer can store information simply by passing patterns of electricity through its memory circuits. But it's a weakness too, because as soon as the power is turned off, all the transistors revert to their original states—and the computer loses all the information it has stored. It's like a giant attack of electronic amnesia!

Memory that "forgets" when the power goes off is called volatile memory, and the most familiar kind is Random Access Memory (RAM). There is another kind of memory called Read-Only Memory (ROM) that doesn't suffer from this problem. ROM chips are pre-stored with information when they are manufactured, so they don't "forget" what they know when the power is switched on and off. However, the information they store is there permanently: it can never be rewritten. In practice, a computer uses a mixture of different kinds of memory for different purposes. The things it needs to remember all the time (like what to do when you first switch it on) are stored on ROM chips. When you're working on your computer and it needs temporary memory for processing things, it uses RAM chips; it doesn't matter that this information is lost later. Information you want a computer to remember indefinitely is stored on its hard drive. It takes longer to read and write information from a hard drive than from memory chips, so hard drives are not generally used as temporary memory. In gadgets like digital cameras and small MP3 players, flash memory is used instead of a hard drive. It has certain things in common with both RAM and ROM. Like ROM, it remembers information when the power is off; like RAM, it can be erased and rewritten over and over again.

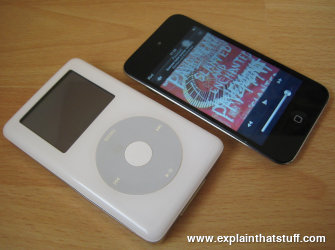

Photo: Apple iPods, past and present. The white one on the left is an old-style classic iPod with a 20GB hard drive memory. The newer black model on the right has a 32GB flash memory, which makes it lighter, thinner, more robust (less likely to die if you drop it), and less power hungry.

How flash memory works—the simple explanation

Photo: A typical secure digital (SD) card from a digital camera. Inside, there's a flash memory chip. How does it work? Read on!

Flash works using an entirely different kind of transistor that stays switched on (or switched off) even when the power is turned off. A normal transistor has three connections (wires that control it) called the source, drain, and gate. Think of a transistor as a pipe through which electricity can flow as though it's water. One end of the pipe (where the water flows in) is called the source—think of that as a tap or faucet. The other end of the pipe is called the drain—where the water drains out and flows away. In between the source and drain, blocking the pipe, there's a gate. When the gate is closed, the pipe is shut off, no electricity can flow and the transistor is off. In this state, the transistor stores a zero. When the gate is opened, electricity flows, the transistor is on, and it stores a one. But when the power is turned off, the transistor switches off too. When you switch the power back on, the transistor is still off, and since you can't know whether it was on or off before the power was removed, you can see why we say it "forgets" any information it stores.

A flash transistor is different because it has two gates, one stacked above the other. When the gate opens, some electricity leaks upward and gets trapped on the lower ("floating") gate, which sits between the pipe and the upper gate. Even if the power is turned off, the electricity is still there, stuck on the floating gate. Now if you try to pass a current through, the stored electricity stops it from flowing so, in this state, the transistor stores a zero. If you clear the stored electricity, current can flow through once again; in this state, the transistor stores a one. That's how a flash transistor stores its information whether the power is on or off.
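The difference between the two kinds of transistor can be sketched as a toy Python model. Everything here (the class names, the `power_cycle` method, the `survive_power_cycle` helper) is invented for illustration and ignores the real physics; it only mirrors the simple explanation above.

```python
class OrdinaryTransistor:
    """Toy model: on stores 1, off stores 0, and power loss switches it off."""

    def __init__(self):
        self.on = False

    def write(self, bit):
        self.on = (bit == 1)

    def read(self):
        return 1 if self.on else 0

    def power_cycle(self):
        self.on = False  # the state is lost when the power goes off


class FlashTransistor:
    """Toy model: trapped electricity blocks the current, which stores 0."""

    def __init__(self):
        self.stored_charge = False  # nothing trapped, so current flows: a 1

    def write(self, bit):
        self.stored_charge = (bit == 0)

    def read(self):
        return 0 if self.stored_charge else 1

    def power_cycle(self):
        pass  # the trapped electricity stays put, so nothing changes


def survive_power_cycle(cell_type, bits):
    """Write bits into fresh cells, switch the power off and on, read them back."""
    cells = [cell_type() for _ in bits]
    for cell, bit in zip(cells, bits):
        cell.write(bit)
        cell.power_cycle()
    return [cell.read() for cell in cells]


print(survive_power_cycle(OrdinaryTransistor, [1, 0, 1, 1]))  # [0, 0, 0, 0]
print(survive_power_cycle(FlashTransistor, [1, 0, 1, 1]))     # [1, 0, 1, 1]
```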

How flash memory works—a more complex explanation

That's a heavily simplified explanation of something that's extremely complex. If you want more detail, it helps if you read our article about transistors first, especially the bit at the bottom about MOSFETs, and then read on.

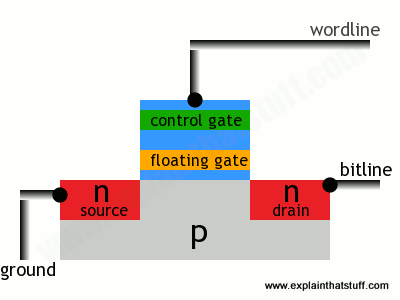

The transistors in flash memory are like MOSFETs, except that they have two gates on top instead of one. This is what a flash transistor looks like inside. You can see it's an n-p-n sandwich with two gates on top, one called a control gate and one called a floating gate. The two gates are separated by oxide layers through which current cannot normally pass:

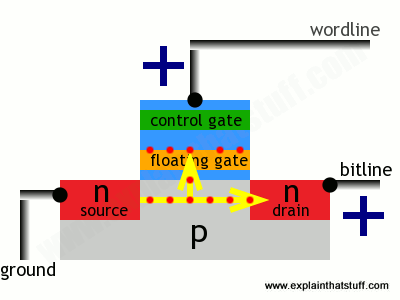

How do we use this to store data? Both the source and the drain regions are rich in electrons (because they're made of n-type silicon), but electrons cannot flow from source to drain because of the electron-deficient p-type material between them. If we apply a positive voltage to the transistor's two contacts, called the bitline and the wordline, electrons get pulled in a rush from source to drain. A few also manage to wriggle through the oxide layer by a process called tunneling and get stuck on the floating gate.

The electrons will stay on the floating gate indefinitely, even when the positive voltages are removed and whether there is power supplied to the circuit or not. If we disconnect the positive voltages from the bitline and wordline and try to pass a current through the transistor, from source to drain, none will flow: the electrons on the floating gate will stop it. So, in this state, we say the transistor is storing a zero. The electrons on the floating gate can be flushed out by putting a negative voltage on the wordline. This repels the electrons back the way they came, clearing the floating gate and allowing a current to flow through the transistor once again. In this state, we say the transistor is storing a one.
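The program, read, and erase steps just described can be sketched as a small Python model. This is a simplification under stated assumptions, not a description of any real chip: the class name, the voltage values, and the `THRESHOLD` electron count are all hypothetical, chosen only to make the logic visible.

```python
class FloatingGateCell:
    """Toy model of one flash cell, following the steps described above."""

    THRESHOLD = 1000  # hypothetical: trapped electrons needed to block the current

    def __init__(self):
        self.trapped_electrons = 0  # an empty floating gate reads as 1

    def program(self, bitline_v, wordline_v):
        """Positive voltage on both lines: electrons tunnel onto the floating gate."""
        if bitline_v > 0 and wordline_v > 0:
            self.trapped_electrons = self.THRESHOLD

    def erase(self, wordline_v):
        """Negative voltage on the wordline repels the electrons back out."""
        if wordline_v < 0:
            self.trapped_electrons = 0

    def read(self):
        """Trapped electrons stop the source-to-drain current, which means 0."""
        return 0 if self.trapped_electrons >= self.THRESHOLD else 1


cell = FloatingGateCell()
print(cell.read())                           # 1 (floating gate empty)
cell.program(bitline_v=5.0, wordline_v=12.0)  # illustrative voltages only
print(cell.read())                           # 0 (electrons trapped)
cell.erase(wordline_v=-12.0)
print(cell.read())                           # 1 (floating gate cleared)
```

Notice that nothing in `read` depends on whether the voltages are still applied: the stored bit lives entirely in the trapped electrons.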

Not an easy process to understand, but that's how flash memory works its magic!

How long does flash memory last?

Flash memory eventually wears out because its floating gates take longer to work after they've been used a certain number of times. It's very widely quoted that flash memory degrades after it's been written and rewritten about "10,000 times," but that's misleading. According to a 1990s flash patent by Steven Wells of Intel, "although switching begins to take longer after approximately ten thousand switching operations, approximately one hundred thousand switching operations are required before the extended switching time has any affect on system operation." Whether it's 10,000 or 100,000, it's usually fine for a USB stick or the SD memory card in a digital camera you use once a week, but less satisfactory for the main storage in a computer, cellphone, or other gadget that's in daily use for years on end. One practical way around the limit is for the operating system to ensure that different bits of flash memory are used each time information is erased and stored (technically, this is called wear-leveling), so no bit is erased too often. In practice, modern computers might simply ignore and "tiptoe round" the bad parts of a flash memory chip, just like they can ignore bad sectors on a hard drive, so the real practical lifetime limit of flash drives is much higher: somewhere between 10,000 and 1 million cycles. Cutting-edge flash devices have been demonstrated that survive for 100 million cycles or more.
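To see why wear-leveling helps, here is a small simulation sketch. It assumes a hypothetical device with 100 erasable blocks and a deliberately naive policy (always pick the least-worn block); real devices use more elaborate schemes, and the function name `simulate_writes` is invented for this example.

```python
def simulate_writes(num_writes, num_blocks, wear_leveling):
    """Return how many times each block was erased after num_writes rewrites."""
    erase_counts = [0] * num_blocks
    for _ in range(num_writes):
        if wear_leveling:
            # Spread the wear: always reuse the block erased least often so far.
            block = erase_counts.index(min(erase_counts))
        else:
            # No leveling: the same block is rewritten every single time.
            block = 0
        erase_counts[block] += 1
    return erase_counts


without = simulate_writes(10_000, 100, wear_leveling=False)
leveled = simulate_writes(10_000, 100, wear_leveling=True)
print(max(without))  # 10000 (one block takes all the wear)
print(max(leveled))  # 100 (the wear is shared across 100 blocks)
```

With the wear shared out, the most heavily used block in this example is erased 100 times less often, so the device as a whole takes far longer to reach whatever the per-block limit turns out to be.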

Who invented flash memory?

Flash was originally developed by Toshiba electrical engineer Fujio Masuoka, who filed US Patent 4,531,203 on the idea with colleague Hisakazu Iizuka back in 1981. Originally known as simultaneously erasable EEPROM (Electrically Erasable Programmable Read-Only Memory), it earned the nickname "flash" because it could be instantly erased and reprogrammed, as fast as a camera flash. At that time, state-of-the-art erasable memory chips (ordinary EPROMs) took 20 minutes or so to wipe for reuse with a beam of ultraviolet light, which meant they needed expensive, light-transparent packaging. Cheaper, electrically erasable chips (EEPROMs) did exist, but used a bulkier and less efficient design that required two transistors to store each bit of information. Flash memory solved these problems.

Photo: Above: Erasable memory before flash: EPROM chips had little round windows in the top through which you could erase their contents using a lengthy blast of UV light. If you're interested, this one's a 32KB (kilobyte) AMD AM27C256 dating from 1986, so it has about 1000 times less storage capacity than even the small 32MB (megabyte) SD card in the top photo. Below: A closeup of the UV-transparent window and the chip wired inside the package.

Toshiba released the first flash chips in 1987, but most of us didn't come across the technology for another decade or so, after SD memory cards first appeared in 1999 (jointly supported by Toshiba, Matsushita, and SanDisk). SD cards allowed digital cameras to record hundreds of photos and made them far more useful than older film cameras, which were limited to taking about 24–36 pictures at a time. Toshiba launched the first digital music player using an SD card the following year. It took Apple a few more years to catch up and fully embrace flash technology in its own digital music player, the iPod. Early "classic" iPods all used hard drives, but the release of the tiny iPod Shuffle in 2005 marked the beginning of a gradual switchover, and all modern iPods and iPhones now use flash memory instead.

What's the future for flash memory?

Flash has rapidly overtaken magnetic storage over the last decade or so; in everything from supercomputers and laptops to smartphones and iPods, hard drives have increasingly given way to fast, compact SSDs (solid-state drives) based on flash chips. That trend has been driven by—and helped to drive—another one: the shift from desktop computers and landline phones to mobile devices (smartphones and tablets) and cellphones, which need ultra-compact, high-density, extremely reliable memories that can withstand the stresses and strains of being thrown around in our backpacks and briefcases. These trends are now favoring 3D flash ("stacked") technology, developed in the early 2000s and formally launched by Samsung in 2013, in which dozens of different layers of memory cells can be grown on the same silicon wafer to increase storage capacity (just like the multiple floors of a high-rise office block let us pack more offices into the same area of land). Instead of using floating gates (as described above), 3D flash uses an alternative (though sometimes less reliable) technique called charge-trap, which allows us to engineer much higher capacity memories in the same amount of space, well into the terabit (Tbit) scale (1 trillion bits = 1,000,000,000,000 bits).

About the author

Chris Woodford is the author and editor of dozens of science and technology books for adults and children, including DK's worldwide bestselling Cool Stuff series and Atoms Under the Floorboards, which won the American Institute of Physics Science Writing award in 2016. You can hire him to write books, articles, scripts, corporate copy, and more via his website chriswoodford.com.